The TPACK Framework: A Comprehensive Analysis of Theory, Practice, and Critique

The Technological Pedagogical Content Knowledge (TPACK) framework stands as a central theoretical construct in the field of educational technology. It provides a nuanced lens for understanding the complex knowledge teachers require to effectively integrate technology into their practice. However, the framework was not conceived in a theoretical vacuum; its development represents a critical evolutionary step, building upon a rich history of research into the nature of teacher knowledge. To fully grasp TPACK, one must first understand its intellectual progenitor: Pedagogical Content Knowledge.

The Foundational Paradigm: Lee Shulman’s Pedagogical Content Knowledge (PCK)

In the mid-1980s, educational researcher Lee Shulman identified a significant blind spot in the study of teaching. Prevailing research had created a false dichotomy, treating a teacher’s knowledge of their subject matter and their general skills in teaching as separate, mutually exclusive domains. Shulman argued against this fragmented view, proposing what he termed Pedagogical Content Knowledge (PCK) as the “missing paradigm” in teacher education research.

PCK is defined as a specialized form of professional knowledge, a unique “amalgam” or “blend” of content and pedagogy that is the signature of an expert teacher. It is this knowledge that distinguishes a teacher from a non-teaching subject matter specialist; for example, it is what makes a history teacher different from a historian. Shulman asserted that a teacher’s expertise lies in their capacity to transform their own deep content knowledge into forms that are “pedagogically powerful,” making the subject comprehensible and accessible to a diverse range of students.

The core of Shulman’s original conception of PCK includes two key elements. First is a knowledge of the most useful representations for the content being taught, including powerful analogies, illustrations, examples, and demonstrations. Second is a deep understanding of what makes specific topics easy or difficult for students to learn. This involves anticipating common student preconceptions and misconceptions and possessing a repertoire of strategies to address these learning challenges.

Shulman situated PCK within a broader, seven-category knowledge base for teaching, which also encompassed content knowledge, curriculum knowledge, general pedagogical knowledge, knowledge of learners and their characteristics, knowledge of educational contexts, and knowledge of educational ends, purposes, and values. This comprehensive view underscores that, from its inception, the concept of specialized teacher knowledge was understood to be multifaceted and deeply influenced by context. While strong evidence supports PCK as a cornerstone of effective teaching, the concept has not been without critique. Some have noted a “general fuzziness” around its conceptualization and boundaries, a theme that would later resurface in discussions of its technological successor.

The Advent of TPACK: Mishra and Koehler’s Extension for a Digital Age

In the early 2000s, education was being reshaped by the proliferation of digital technologies. Researchers Punya Mishra and Matthew J. Koehler recognized that Shulman’s foundational model, while still essential, was under-theorized for an era in which teaching was increasingly mediated by complex digital tools. They argued that a new domain of knowledge was required to account for the unique challenges and opportunities presented by technology.

The rationale for this extension rests on the fundamental difference between traditional and modern educational technologies. Traditional tools like a pencil, chalkboard, or microscope are typically characterized by specificity, stability, and transparency of function. In contrast, newer digital technologies are often “protean, unstable, and opaque”. Their functions are malleable, they change rapidly, and their inner workings are not obvious to the user. These characteristics present novel pedagogical challenges and affordances that demand a distinct form of teacher knowledge.

Following a five-year design-based research study aimed at understanding how educators develop rich uses of technology, Mishra and Koehler proposed their framework in 2006. Initially named TPCK (Technological Pedagogical Content Knowledge), it was later renamed TPACK to make it easier to pronounce and to better symbolize the integrated, holistic nature of the construct. Since its introduction, the TPACK framework has become one of the leading theories guiding research, professional development, and practice in educational technology integration.

Deconstructing the Seven Knowledge Domains of TPACK

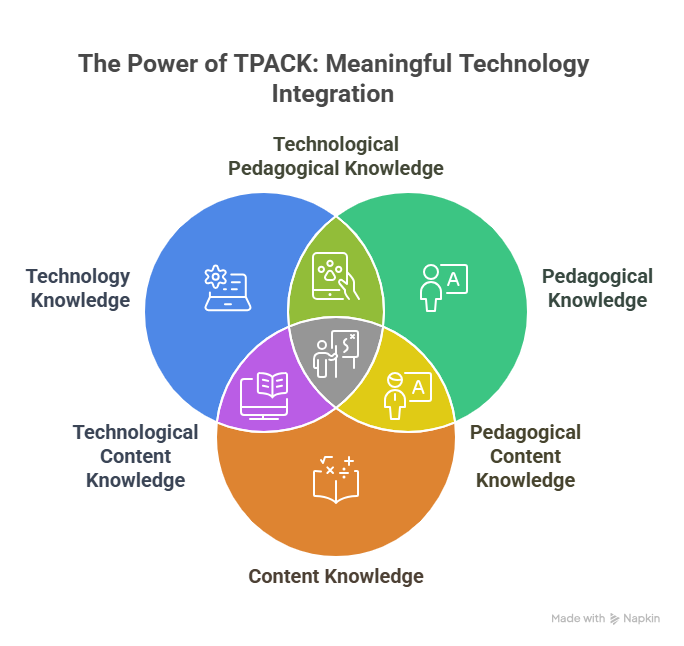

The TPACK framework is most famously represented by a Venn diagram showing three primary circles of knowledge—Technology, Pedagogy, and Content—and the four intersecting domains that arise from their interplay. This structure yields seven distinct but interrelated knowledge domains.
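The count of seven follows directly from the geometry of a three-circle Venn diagram: each non-empty combination of the three core circles is one region, giving 2^3 - 1 = 7 regions (three single domains, three pairwise overlaps, and one central overlap). The short Python sketch below is purely illustrative; the `acronym` and `venn_regions` helpers are hypothetical names that simply encode the naming convention used in this article.

```python
from itertools import combinations

# The three core knowledge domains, keyed by the letter each contributes
# to the acronyms (insertion order T, P, C matches the usual naming).
CORE = {"T": "Technological", "P": "Pedagogical", "C": "Content"}


def acronym(region: tuple[str, ...]) -> str:
    """Hypothetical helper: name a Venn region from the circles it overlaps."""
    if len(region) == 3:
        return "TPACK"  # conventional name for the central region
    return "".join(region) + "K"  # e.g. ("T", "C") -> "TCK"


def venn_regions() -> list[str]:
    """Return one acronym per non-empty region of the three-circle diagram."""
    letters = list(CORE)  # ["T", "P", "C"]
    regions = []
    for size in (1, 2, 3):  # single domains, pairwise overlaps, the center
        for combo in combinations(letters, size):
            regions.append(acronym(combo))
    return regions
```

Calling `venn_regions()` returns the seven labels TK, PK, CK, TPK, TCK, PCK, and TPACK, one for each region of the diagram.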

- The Three Core Domains:

  - Content Knowledge (CK): Refers to the teacher’s knowledge of the subject matter they are responsible for teaching. This extends beyond factual knowledge to include concepts, theories, evidence, organizational frameworks, and the established practices and methods of inquiry within a discipline. For example, a science teacher’s CK includes not only knowledge of facts and theories but also an understanding of the scientific method and evidence-based reasoning.

  - Pedagogical Knowledge (PK): Encompasses the teacher’s deep knowledge about the processes, practices, and methods of teaching and learning. This is a generic form of knowledge that includes understanding learning theories, student learning styles, classroom management strategies, lesson planning techniques, and assessment methods. For instance, knowing how to structure a jigsaw activity to foster both individual accountability and group collaboration is an expression of PK.

  - Technological Knowledge (TK): Describes a teacher’s knowledge of and ability to use a wide array of technologies, from low-tech tools like a pencil to advanced digital resources like the internet, software programs, and interactive whiteboards. Crucially, TK is not static; it is a fluid and constantly evolving knowledge base that requires continuous learning. It also involves the wisdom to recognize when a particular technology will assist or impede student learning.

- The Four Intersecting Domains:

  - Pedagogical Content Knowledge (PCK): The intersection of pedagogy and content, consistent with Shulman’s original formulation. It is the specialized knowledge for teaching specific content, which includes knowing how to represent topics in ways that are understandable to learners and anticipating and addressing common student misconceptions.

  - Technological Content Knowledge (TCK): The intersection of technology and content. It is an understanding of the reciprocal relationship between technology and content: how technology can be used to represent and create new representations of content, and how content can be altered or constrained by technology. A chemistry teacher using a simulation to allow students to visualize and manipulate molecular structures in 3D, a task impossible with a 2D textbook diagram, is demonstrating TCK.

  - Technological Pedagogical Knowledge (TPK): The intersection of technology and pedagogy. It is an understanding of how teaching and learning can change when particular technologies are used. This includes knowing the affordances and constraints of various tools for different pedagogical approaches, such as using a collaborative online platform to facilitate a student-centered, inquiry-based project.

  - Technological Pedagogical Content Knowledge (TPACK): The central domain, representing the synthesis of all three core knowledge areas. TPACK is an emergent form of knowledge that is greater than the sum of its parts. It is the nuanced and flexible knowledge that allows a teacher to select, orchestrate, and evaluate the use of specific technologies to teach specific content via specific pedagogical methods in a given context. It represents true, meaningful technology integration.

The Venn diagram implies that the domains are distinct bodies of knowledge that overlap, which invites an intersectional reading of the model. However, a deeper reading of the theory reveals a more dynamic relationship. The originators describe a “dynamic, transactional relationship” between the components, where each domain mutually influences and transforms the others in the authentic act of teaching. This transactional view suggests that TPACK is not a static body of knowledge to be possessed, but an emergent form of knowing-in-action. This distinction has profound implications.

An intersectional view might lead to professional development that teaches technology skills in isolation, hoping teachers will connect them to their practice later. A transactional view demands that teacher learning be situated in authentic design problems where these relationships must be negotiated simultaneously, fostering a more integrated and applicable form of knowledge.

Table 1: The Seven Domains of the TPACK Framework

| Domain & Acronym | Core Definition | Key Teacher Competencies | Concrete Classroom Example |
| --- | --- | --- | --- |
| Content Knowledge (CK) | Knowledge of the subject matter, including facts, concepts, theories, and disciplinary frameworks. | Demonstrates deep understanding of the discipline; knows the central ideas and organizational structures of the content; understands the rules of evidence and proof within the field. | A history teacher understands not just the dates of the Civil War, but also the complex economic, social, and political factors that caused it. |
| Pedagogical Knowledge (PK) | Knowledge of the processes, practices, and methods of teaching and learning, independent of content. | Manages the classroom effectively; plans and structures lessons; understands different learning theories and student learning styles; uses a variety of assessment strategies. | A teacher organizes students into a jigsaw activity, making each student responsible for a piece of the content that they must then teach to their peers. |
| Technological Knowledge (TK) | Knowledge about various technologies, from low-tech to digital, and the ability to use them productively and adapt to new ones. | Can operate various hardware and software; stays current with new technology offerings; understands when technology can support or hinder learning; troubleshoots basic technology problems. | A teacher is proficient in using the school’s Learning Management System (LMS) to post assignments and communicate with students. |
| Pedagogical Content Knowledge (PCK) | The blend of content and pedagogy: knowledge of how to teach specific subject matter effectively. | Adapts content for diverse learners; selects effective teaching strategies for specific topics; anticipates and addresses student misconceptions; uses powerful analogies and examples. | A math teacher uses physical manipulatives (like fraction bars) to help students grasp the abstract concept of equivalent fractions. |
| Technological Content Knowledge (TCK) | Understanding how technology and content influence and constrain one another, and how technology can represent content in new ways. | Selects technologies that best represent specific content; understands how technology can change the nature of the content; uses technology to help students access and process content. | A history teacher uses a virtual reality (VR) field trip to let students explore the ancient pyramids of Egypt, providing an immersive experience of historical content. |
| Technological Pedagogical Knowledge (TPK) | Understanding how teaching and learning can change when particular technologies are used in particular ways. | Chooses technologies that align with pedagogical goals; understands the pedagogical affordances and constraints of tools; manages a technology-rich classroom environment. | A teacher uses an online polling tool like Nearpod to gather real-time formative assessment data from all students simultaneously, rather than calling on one student at a time. |
| Technological Pedagogical Content Knowledge (TPACK) | The emergent, integrated knowledge of using technology and pedagogy to teach content in a context-specific manner. | Flexibly orchestrates content, pedagogy, and technology; designs effective, technology-enhanced learning experiences; selects appropriate tools for specific learning objectives; adapts practice based on context. | A life sciences teacher has students use tablets (TK) to create short, personified videos explaining the function of a cell organelle (PK) to demonstrate their understanding of cell anatomy (CK). |

The Overarching Influence: Contextual Knowledge (XK)

A critical evolution of the TPACK framework has been the increasing formalization of context. In early diagrams, context was often represented by a dotted circle encompassing the entire model, acknowledging its influence but leaving it somewhat undefined. Recognizing its central importance, Mishra proposed in 2019 to explicitly label this outer layer as Contextual Knowledge, or XK.

Context is not a monolithic entity. It operates at multiple levels: the micro level of the specific classroom and students, the meso level of the school and district, and the macro level of national and global policies and culture. This knowledge encompasses a vast range of factors, including student characteristics, available resources, school and district policies, parental concerns, and community values.

The explicit inclusion of context reinforces a crucial aspect of the framework: TPACK is highly situated. As one analysis notes, TPACK is “unique, temporary, situated, idiosyncratic, adaptive, and specific, and will be different for each teacher in each situation”. A lesson plan that represents masterful TPACK in a well-resourced suburban school might be an utter failure in a rural school with limited internet access. This situated nature complicates any notion of a one-size-fits-all “best practice” for technology integration.

This evolution, however, has also highlighted a core conceptual tension. The literature has been inconsistent in its treatment of context, sometimes viewing it as the set of external factors influencing a teacher’s knowledge, and at other times treating it as a domain of knowledge that a teacher possesses. This ambiguity is a significant source of the “fuzzy boundaries” critique leveled against the framework. Without a clear and agreed-upon ontological status for context, it becomes exceedingly difficult to operationalize and measure its role, posing a barrier to the framework’s maturation from a powerful heuristic to a fully robust scientific theory.

The Application of TPACK in Instructional Design and Practice

Moving from the theoretical architecture to its practical application, the TPACK framework serves as a powerful heuristic for designing meaningful, technology-integrated learning experiences. It is not a prescriptive recipe but a reflective tool that guides educators toward more intentional and effective instructional choices.

TPACK as a Heuristic for Reflective Lesson Planning

The primary value of TPACK in practice is its function as a guide for reflective lesson planning. It fundamentally reorients the planning process away from a “technocentric” approach, where a teacher finds a “cool tool” and then builds a lesson around it. Instead, the framework insists that planning begin with the learning goals (Content Knowledge) and the pedagogical strategies best suited to help students achieve them (Pedagogical Knowledge). Only then is technology layered in, with the teacher asking critical questions about how a specific tool can purposefully enhance the content and pedagogy. This intentional process, which must also consider the specific classroom context, helps teachers make choices that truly maximize student learning rather than using technology for its own sake.

Case Study in Practice: History/Social Studies

A compelling example of TPACK in a history classroom is a project-based unit titled “Humans of the Civil War,” designed for middle or high school students. This lesson moves beyond a traditional focus on dates and battles to cultivate historical empathy and an understanding of multiple perspectives.

- Content Knowledge (CK): The learning objectives are for students to understand the diverse lived experiences of individuals during the American Civil War, including soldiers of different ranks, male and female civilians, and enslaved persons. Students are expected to analyze primary and secondary sources to understand the war’s causes and effects from a human-centered perspective.

- Pedagogical Knowledge (PK): The lesson is structured around an inquiry-based and project-based learning model. This pedagogy prioritizes student agency and creativity, as students can choose a historical figure to research. The primary learning activities are research, role-playing, and storytelling, rather than teacher-led direct instruction.

- Technology Integration (TPACK):

  - Research Phase (TCK): Students utilize digital archives, online museum collections, and curated historical websites to conduct research on their chosen individual. Technology provides access to a wealth of primary source documents, letters, and photographs that would be inaccessible in a standard textbook. This is a clear demonstration of Technological Content Knowledge: using technology to access, analyze, and represent historical content.

  - Creation Phase (TPACK): The culminating task requires students to create a digital artifact, such as a storyboard or a short video, where they role-play their historical figure being interviewed. They might use tools like Storyboard That, a simple video editor on a tablet, or a web-based presentation tool. In this phase, the technology (TK) is not merely a container for information; it is the medium for a specific pedagogical task, storytelling and role-playing (PK), designed to demonstrate a deep understanding of historical content and perspective (CK). This synthesis of all three domains to create a novel and meaningful learning task is the essence of TPACK.

Case Study in Practice: Science

In a high school biology class, TPACK can be applied to transform the study of a complex process like photosynthesis from a passive exercise in memorization to an active investigation.

- Content Knowledge (CK): The learning objectives are for students to identify the reactants (CO2, H2O) and products (glucose, O2) of photosynthesis, explain the role of light and chlorophyll, and analyze how environmental factors like light intensity and carbon dioxide concentration affect the rate of the reaction.

- Pedagogical Knowledge (PK): The lesson is structured using a constructivist, 5E (Engage, Explore, Explain, Elaborate, Evaluate) model. This pedagogical approach emphasizes inquiry and discovery, where students construct their own understanding through investigation.

- Technology Integration (TPACK):

  - Explore Phase (TPACK): Students work in pairs using an online photosynthesis simulation. This technology (TK) allows them to conduct virtual experiments, manipulating variables like light intensity and CO2 levels, factors that are difficult and time-consuming to control in a physical lab setting. They can observe the immediate effect on the rate of oxygen production, collecting data directly from the simulation. This demonstrates powerful TCK, as the technology provides a new way to represent and interact with scientific content (CK). The pedagogical design (PK) is inquiry-based; students are tasked with discovering the relationships between variables, not simply being told them. The technology enables a pedagogical approach that would otherwise be impractical, fostering deep conceptual understanding.

  - Elaborate Phase (TCK): To extend their learning, students can use data visualization tools like spreadsheet software to graph the data collected from their simulations. This allows them to mathematically analyze the relationships they observed. This use of technology to analyze and represent scientific data is a further demonstration of TCK that, when integrated within the 5E lesson structure, contributes to a rich TPACK-informed experience.

These case studies reveal a critical pattern. Effective TPACK is not about using technology to make traditional teaching methods more efficient, such as using presentation software instead of an overhead projector for a lecture. It is about fundamentally transforming the nature of the student’s task. In the history example, students shift from learning about historical figures to acting as historical researchers and storytellers. In the science example, they move from reading about photosynthesis to conducting experiments and analyzing data like scientists. This demonstrates that the ultimate goal of TPACK is not merely to enhance instruction but to foster disciplinary practice. It allows teachers to design learning environments where students engage in the authentic habits of mind and work of the field they are studying, cultivating critical thinking, collaboration, and creativity.

Methodologies for Assessing Teacher TPACK

Given the complexity and situated nature of the TPACK framework, developing valid and reliable methods to assess a teacher’s knowledge has proven to be a significant challenge for the field. Assessing TPACK is not as simple as administering a standardized test, because the knowledge itself is most authentically reflected through a teacher’s planning, actions, and reflections. The absence of a single, universally accepted assessment method has complicated efforts to track teacher growth and provide targeted professional development. Researchers have therefore developed a variety of quantitative and qualitative approaches, each with distinct strengths and limitations.

Quantitative Approaches: Self-Report Surveys

The most common method for assessing TPACK, particularly in large-scale studies, is the self-report survey. The most prominent of these is the “Survey of Preservice Teachers’ Knowledge of Teaching and Technology,” developed by Schmidt, Baran, Thompson, and colleagues in 2009. This instrument consists of a series of Likert-type items designed to measure a teacher’s self-perception of their knowledge across all seven TPACK domains and within specific content areas like math, science, and literacy. The survey has demonstrated high internal consistency and has been widely adapted for use in various contexts.
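To make concrete what such an instrument produces, the following minimal Python sketch shows how Likert-type responses are commonly turned into per-domain subscale scores, and how internal consistency is typically summarized with Cronbach's alpha. Everything in it is an assumption for illustration: the six items, their domain labels, the ratings, and the function names are hypothetical and do not reproduce the published survey. Every number it outputs is still a self-rating.

```python
from statistics import mean, variance

# Hypothetical item-to-domain mapping for a six-item, two-domain survey.
# Ratings are on a 5-point Likert scale (1 = strongly disagree, 5 = strongly agree).
ITEM_DOMAINS = ["TK", "TK", "TK", "PK", "PK", "PK"]


def domain_scores(response: list[int]) -> dict[str, float]:
    """Average one respondent's item ratings within each domain subscale."""
    by_domain: dict[str, list[int]] = {}
    for domain, rating in zip(ITEM_DOMAINS, response):
        by_domain.setdefault(domain, []).append(rating)
    return {domain: mean(ratings) for domain, ratings in by_domain.items()}


def cronbach_alpha(responses: list[list[int]]) -> float:
    """Cronbach's alpha for one subscale (rows = respondents, columns = items).

    Call it once per domain, passing only that domain's item columns.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of totals)
    """
    k = len(responses[0])                       # number of items in the subscale
    items = list(zip(*responses))               # transpose rows into item columns
    item_var_sum = sum(variance(col) for col in items)
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - item_var_sum / total_var)
```

For example, `domain_scores([4, 5, 3, 2, 2, 5])` returns a TK mean of 4 and a PK mean of 3, and `cronbach_alpha([[1, 1], [2, 2], [3, 3]])` returns 1.0 because the two items vary in perfect lockstep across respondents.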

Despite their widespread use, self-report surveys are subject to a significant and well-documented critique: they are more likely to measure a teacher’s confidence or self-efficacy than their actual competence in practice. Research has shown that gains on these surveys following a professional development program often reflect an increase in teachers’ confidence regarding the topic, rather than a demonstrable improvement in their classroom instruction. This “confidence vs. competence” gap represents a major validity concern for a large portion of the quantitative research on TPACK, suggesting that findings based solely on these instruments should be interpreted with considerable caution.

Performance-Based and Qualitative Approaches

To address the limitations of surveys, researchers have turned to performance-based and qualitative methods that aim to capture TPACK as it is enacted in practice. These methods provide a richer, more authentic picture of a teacher’s knowledge.

- Observations: Direct classroom observation allows an assessor to see TPACK in action. However, inferring the underlying knowledge and reasoning solely from observed behavior is difficult, as the teacher’s decision-making process remains invisible. Therefore, observations are most powerful when triangulated with other data sources, particularly pre- and post-observation interviews where the teacher can articulate the rationale behind their instructional choices. Structured observation checklists, often adapted from Shulman’s work on PCK, can help focus the observation on specific elements like comprehension, transformation of content, and instructional strategies.

- Analysis of Teaching Artifacts: A more feasible and scalable alternative to extensive observation is the analysis of teaching artifacts, most notably lesson plans. Researchers have developed detailed rubrics to systematically assess the quality of technology integration and the level of TPACK demonstrated within an instructional plan. These rubrics typically evaluate the pedagogical “fit” between the selected content, teaching strategies, and technologies, in relation to the stated curriculum goals.

- Portfolio Assessment: Portfolios offer a longitudinal method for assessing TPACK development over time. A teacher’s TPACK portfolio would be a curated collection of evidence, such as a series of lesson plans, video recordings of instruction, samples of student work created with technology, and, most importantly, the teacher’s own written reflections. These reflections provide a crucial window into their pedagogical reasoning and decision-making processes. Evaluation criteria for such a portfolio would focus not just on the quality of the artifacts but on demonstrated growth and the depth of reflection over time. While promising, the use of portfolios to assess teacher TPACK is less developed in the literature compared to their use for assessing student learning, representing an area for future research.

The diversity of these assessment tools reveals a deeper conceptual issue. The choice of an assessment instrument implicitly defines what “counts” as TPACK. A survey defines it as a set of self-perceived skills; a lesson plan rubric defines it as a design capacity; an observation protocol defines it as an enacted performance. This lack of a unified assessment approach perpetuates the “fuzzy boundaries” critique of the framework. If researchers and practitioners are using different tools that measure different facets of the construct, they are, in effect, studying and discussing different things under the same “TPACK” label. This fragmentation makes it difficult to build a coherent body of knowledge about how TPACK truly develops and how best to support it, highlighting the need for more integrated assessment models that capture planning, enactment, and reflection.

Table 2: Summary of TPACK Assessment Methodologies

| Methodology | Format/Instrument | Primary Use Case | Key Strengths | Documented Limitations |
| --- | --- | --- | --- | --- |
| Self-Report Surveys | Likert-scale survey (e.g., Schmidt et al., 2009) | Large-scale research; measuring self-efficacy and confidence. | Scalable and easy to administer; allows for quantitative analysis. | Measures confidence, not necessarily competence; lacks context; subject to self-report biases. |
| Classroom Observations | Observation checklists combined with pre/post interviews | Evaluating enacted practice; professional development feedback. | Authentic, capturing TPACK in action; provides rich, contextualized data. | Observer cannot see the teacher’s reasoning without interviews; time-consuming and resource-intensive; subject to observer bias. |
| Artifact Analysis | Rubrics for assessing lesson plans or other teaching artifacts (e.g., TIAI) | Assessing planning and design capacity; evaluating outputs of professional development. | Examines pedagogical reasoning and intent; more scalable than observation; can be standardized with rubrics. | Does not show classroom enactment or student impact; quality of assessment depends on the detail of the artifact. |
| Portfolio Assessment | Curated collection of artifacts (plans, videos, reflections) with evaluation criteria | Documenting professional growth over time; formative and summative evaluation. | Holistic, showing development and reflection; teacher-driven and empowering; provides authentic evidence of practice. | Highly resource-intensive for both teacher and assessor; scoring can be subjective; less developed as a formal TPACK assessment method. |

A Critical Examination of the TPACK Framework

Despite its widespread adoption and influence, the TPACK framework is the subject of a robust and ongoing critical discourse. These critiques do not necessarily invalidate the framework but rather point to conceptual ambiguities, practical challenges, and areas needing further development to enhance its utility for researchers and practitioners.

Conceptual Ambiguity: The “Fuzzy Boundaries” Problem

A primary and persistent critique leveled against TPACK is the lack of clear, distinct boundaries between its constituent knowledge domains, particularly the intersecting areas of TPK, TCK, and TPACK itself. Researchers have noted the difficulty of empirically distinguishing these constructs from one another in practice. This conceptual “fuzziness” has led to a proliferation of definitions (one analysis found 89 different definitions of TPACK in the literature) and a state of semantic inconsistency that complicates research and theory-building. The inconsistent treatment of “context,” as previously discussed, is a major contributor to this ambiguity.

The Risk of Technocentrism and the Role of Content

While the framework’s authors advocate for starting with content and pedagogy, critics argue that its visual representation can inadvertently promote a technocentric viewpoint. Placing Technology (TK) in a prominent position, such as the top circle in many diagrams, can subtly privilege it over the other domains. This can encourage the very practice the framework seeks to avoid: teachers becoming excited about a new technology and designing a lesson around the tool, rather than selecting a tool in service of specific learning goals. Furthermore, some argue that the framework can downplay the foundational role of deep Content Knowledge (CK) in curriculum planning, failing to adequately safeguard essential learning objectives from being overshadowed by the technological bells and whistles.

From Theory to Practice: The “Wicked Problem” of Integration

A frequent criticism from practitioners is that the framework, while conceptually appealing, can be too theoretical and abstract to offer concrete, actionable guidance for implementation in diverse classroom settings. This gap between theory and practice is illuminated by framing technology integration as a “wicked problem”—a class of complex, ill-structured problems that have no simple or universal solutions due to unique contextual constraints and stakeholder needs.

Viewing TPACK implementation through this lens helps to reframe the challenges. The issue is not simply a failure to overcome barriers, but an ongoing process of managing inherent complexity. This perspective suggests that effective technology integration requires teachers to act as “bricoleurs” or “personalized curriculum designers,” creatively tinkering with and adapting their knowledge, beliefs, and tools to fit their specific context. While this empowers teachers, it also highlights the immense difficulty of creating scalable, transferable “best practices” and underscores the significant time and cognitive load placed upon educators. This reframing shifts the focus of leadership and policy from seeking a single, replicable “solution” to fostering adaptive systems that support teachers in the continuous, situated problem-solving that effective TPACK demands.

Systemic Barriers to TPACK Implementation

The practical application of TPACK is often hindered by a host of systemic barriers that exist at both the institutional and individual levels.

- Institutional and External Factors: These include a lack of adequate resources, such as outdated hardware, unreliable internet access, and insufficient technical support. A lack of clear administrative vision, supportive policies, and dedicated time for teachers to plan, collaborate, and learn are also significant obstacles. Furthermore, professional development is often ineffective, focusing on isolated technology skills in a “technocentric” manner rather than on integrated practice.

- Teacher and Internal Factors: Individual factors also play a crucial role. Teachers may lack confidence or suffer from “technophobia,” which can lead to resistance to change. The established pedagogical beliefs and practices of experienced teachers can limit their willingness to experiment with new, technology-supported strategies. A general lack of digital literacy and, critically, a shortage of creative ideas for designing meaningful technology-enhanced tasks are also commonly cited challenges.

These critiques are not isolated; they are interconnected in a reinforcing cycle. The fundamental conceptual fuzziness makes it difficult to develop reliable assessment methods. The lack of clear assessment makes it nearly impossible to provide teachers with specific, actionable feedback, which contributes to the feeling that the framework is too abstract for practice. This lack of practical guidance can lead teachers to fall back on simpler, technocentric approaches, which in turn further muddies the conceptual understanding of what true integration looks like. Breaking this cycle requires a concerted research effort to address the foundational issue of conceptual ambiguity by developing clearer, operational definitions for the framework’s domains.

TPACK in the Landscape of Educational Technology Models

The TPACK framework does not exist in isolation. It is part of a broader landscape of models designed to help educators think about technology integration. A comparison with SAMR, another of the most prominent models, is particularly useful for illuminating the distinctive philosophy and contribution of each.

Introducing the Contenders: TPACK and SAMR

As established, TPACK is a knowledge framework that describes the integrated set of knowledge domains—Content, Pedagogy, and Technology—that a teacher needs to possess for effective practice.

The SAMR model, developed by Dr. Ruben Puentedura, is a different type of framework. It is a hierarchical model used to classify and evaluate the level of technology integration in a specific learning activity. It consists of four levels:

- Substitution: Technology acts as a direct substitute for a traditional tool, with no functional change to the task. An example is students typing an essay instead of handwriting it.

- Augmentation: Technology acts as a direct substitute but with functional improvements. An example is using a word processor’s spell-check and formatting tools while writing the essay.

- Modification: Technology allows for a significant redesign of the task. An example is transforming the essay into a collaborative multimedia presentation posted online for peer feedback.

- Redefinition: Technology allows for the creation of new tasks that were previously inconceivable. An example is having students collaborate in real-time with a class in another country to produce a joint documentary on a shared topic.

A Comparative Analysis: Differing Philosophies and Foci

While both models aim to improve teaching with technology, their core philosophies, primary focus, and practical utility for teachers are fundamentally different. TPACK focuses on the teacher’s internal knowledge and reasoning process, asking, “What combination of knowledge is needed to design this lesson effectively?” In contrast, SAMR focuses on the external, observable student task, asking, “How is technology changing the nature of this activity?”

This leads to different structures. TPACK is a holistic, non-hierarchical framework represented by a Venn diagram, emphasizing the interplay of knowledge domains. SAMR is a prescriptive, four-level hierarchy, providing a ladder for evaluating the transformative impact of technology. TPACK’s strength lies in its comprehensive, theoretically grounded approach that connects technology to the core tenets of teaching. Its primary limitation is its perceived abstractness and the difficulty of putting it into practice. SAMR’s strength is its simplicity and its utility as a clear, evaluative tool for reflection. Its main weakness is its potential to oversimplify the complex dynamics of a classroom and its lack of a robust, peer-reviewed theoretical foundation.

The debate is often framed as “TPACK vs. SAMR,” implying that an educator must choose between them. However, a more sophisticated understanding reveals that the models are not competitive but complementary; they simply answer different questions about the same phenomenon. A teacher can use the TPACK framework during the planning phase to ensure their instructional design is sound: that the technology, pedagogy, and content are purposefully aligned. Subsequently, they can use the SAMR model during the reflection phase to evaluate the nature of the task they created and consider how it might be enhanced. For instance, a teacher with strong TPACK might intentionally design a lesson at the “Substitution” level because it is the most pedagogically appropriate choice for a specific learning goal. Conversely, a lesson could reach the “Redefinition” level but still reflect poor TPACK if the novel task is disconnected from the content objectives or is pedagogically unsound. The most effective practice involves using both models in concert: TPACK to guide thoughtful design and SAMR to prompt critical reflection.

Table 3: Comparative Analysis of TPACK and SAMR Models

| Dimension | TPACK (Technological Pedagogical Content Knowledge) | SAMR (Substitution, Augmentation, Modification, Redefinition) |
|---|---|---|
| Core Philosophy | A framework of teacher knowledge. | A model of levels of technology use. |
| Primary Goal | To describe the nature of the knowledge required for effective teaching with technology. | To evaluate and classify the degree of technology integration in a task. |
| Focus | The teacher’s internal, integrated knowledge and reasoning process. | The external, observable student task and its transformation. |
| Structure | Holistic, non-hierarchical Venn diagram representing the interplay of domains. | Prescriptive, four-level hierarchy from enhancement to transformation. |
| Practical Utility | A reflective tool for planning and designing instruction. | An evaluative tool for classifying and reflecting on activities. |
| Key Strength | Comprehensive and theoretically grounded in the complexities of teaching. | Simple and clear; provides a practical ladder for reflection and goal-setting. |
| Key Limitation | Can be abstract and difficult to measure or apply directly in practice. | Oversimplifies the complexity of teaching; has a weaker theoretical foundation. |

Future Directions and Recommendations for Practice

The TPACK framework has undeniably shaped the discourse on technology integration in education for over a decade. To ensure its continued relevance and increase its practical impact, future efforts must focus on refining the framework’s conceptual clarity, transforming professional development practices, and fostering supportive educational ecosystems.

Refining the Framework: The Path to TPACK 2.0

The future vitality of TPACK as a research construct depends on addressing its core conceptual challenges.

- Clarifying the Constructs: The highest priority for the research community should be to address the “fuzzy boundaries” problem. This requires developing clearer, empirically grounded, and operational definitions for the intersecting knowledge domains, particularly TCK, TPK, and TPACK itself, to allow for more reliable measurement and a more coherent body of research.

- Systematizing Context (XK): Researchers must move beyond simply acknowledging the importance of context. Future work should focus on developing more systematic and robust models of how specific contextual factors at the micro, meso, and macro levels interact with and shape a teacher’s TPACK.

- Addressing Broader Factors: The framework must evolve to more explicitly incorporate contemporary challenges and considerations, such as the ethical implications of educational technologies, issues of digital equity and access, and the affective and social-emotional dimensions of learning in technology-mediated environments.

Recommendations for Teacher Education and Professional Development

To translate TPACK theory into meaningful practice, teacher education and professional development programs must shift their approach.

- Move Beyond Technocentric Training: The evidence strongly suggests that isolated, skills-based technology workshops are ineffective because they disregard the integrated nature of teacher knowledge. Programs should cease offering training on “how to use Tool X” in a vacuum.

- Embrace Learning by Design: Professional development should be structured around a “Learning by Design” model, where teachers work in collaborative, content-alike teams to solve authentic pedagogical problems from their practice. This approach develops PCK and TPACK simultaneously by situating learning in the complex reality of instructional design.

- Foster Reflective Practice: Professional development must be an ongoing process that incorporates structured reflection. Tools such as teaching portfolios, case study development, and peer observation with reflective debriefs can help teachers articulate, analyze, and ultimately refine their TPACK over time.

Recommendations for Educational Leaders and Policymakers

The development of teacher TPACK is not solely the responsibility of the individual teacher; it requires a supportive ecosystem cultivated by educational leaders and policymakers.

- Create a Supportive Ecosystem: Leaders must recognize that developing sophisticated TPACK requires significant resources. This includes providing up-to-date technology and robust technical support, but most critically, it requires providing teachers with the most valuable resource: dedicated, structured time for collaborative planning, professional learning, and experimentation.

- Rethink Evaluation: Teacher evaluation systems must evolve to recognize and reward thoughtful, nuanced technology integration (i.e., strong TPACK) rather than superficial use. This means moving beyond simple checklists of technology usage and adopting more sophisticated assessment methods, such as portfolio reviews or observation protocols that are coupled with conversations about pedagogical reasoning.

- Adopt a “Wicked Problem” Mindset: Leaders must abandon the search for a single, scalable, top-down “solution” for technology integration. Instead, they should adopt the mindset that they are managing a “wicked problem”. This requires fostering a school culture that values professional inquiry, tolerates risk-taking, and supports continuous, iterative improvement, thereby empowering teachers as the expert designers of learning within their unique contexts.
